GPT2 in MindSpore ModelZoo

Declaration

This is an early version of MindSpore GPT2. The final version will be merged into MindSpore’s ModelZoo.

MindSpore GPT2 has been merged into the master branch of MindSpore’s official repo. Link

The official link of MindSpore ModelZoo: Link

You can find our unmerged official MindSpore ModelZoo commit here: Link Full Repo

Once it is merged into the master branch of the MindSpore repository, the official link will be here: Link

In Brief

An implementation of GPT2 written from scratch with MindSpore. OpenAI’s original paper: A. Radford, J. Wu, R. Child, D. Luan, D. Amodei, I. Sutskever, “Language Models are Unsupervised Multitask Learners”. Link

Download a MindSpore-GPT2 pretrained model

The model is a ‘.ckpt’ file and is available in 3 different sizes. Use the MindSpore function ‘load_checkpoint’ to load the parameters. Download link: https://pan.baidu.com/s/1WZ0rZ0qafkUvEOScoN21YQ Download key: 1895
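As a rough illustration of loading a checkpoint, the sketch below wraps ‘load_checkpoint’ and ‘load_param_into_net’ from mindspore.train.serialization. The helper names and the checkpoint path are hypothetical (this README does not list the downloaded file names), and the network passed to load_into_network is assumed to be a GPT2 model you have already constructed with a matching size.

```python
import os


def load_gpt2_parameters(ckpt_path):
    """Return the parameter dict stored in a MindSpore '.ckpt' file.

    ckpt_path is a hypothetical local path to one of the downloaded
    checkpoints; the actual file names are not given in this README.
    """
    if not os.path.isfile(ckpt_path):
        raise FileNotFoundError(f"checkpoint not found: {ckpt_path}")

    # Imported lazily so the path check works even without MindSpore installed.
    from mindspore.train.serialization import load_checkpoint

    # load_checkpoint returns a dict mapping parameter names to Parameter objects.
    return load_checkpoint(ckpt_path)


def load_into_network(network, ckpt_path):
    """Copy checkpoint parameters into an already-constructed network (a Cell)."""
    from mindspore.train.serialization import load_param_into_net

    param_dict = load_gpt2_parameters(ckpt_path)
    load_param_into_net(network, param_dict)
    return network
```

The checkpoint size has to match the network configuration (small, medium, or large); otherwise the parameter shapes will not line up when they are copied into the network.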

Generation Demo

Usage

Download official image of MindSpore

Use Docker to pull the official MindSpore GPU image (tag 1.0.1 is recommended for this repo):

docker pull mindspore/mindspore-gpu:1.0.1

You can then run an nvidia-docker2 container and mount this repo into the container’s file system.

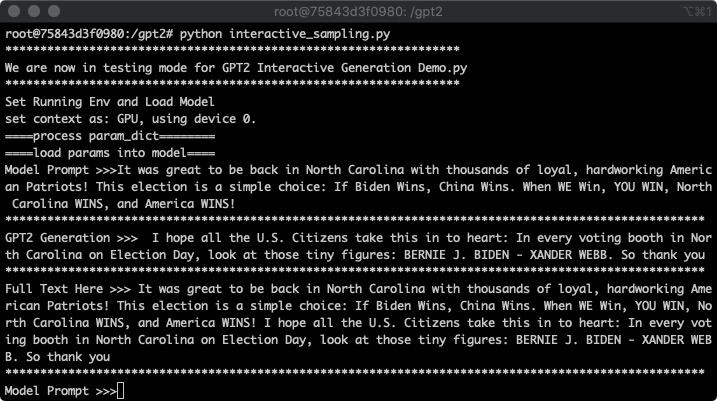

Run the Python file

python interactive_sampling.py

Summarization Task

Performance on CNN-Dailymail (version 3.0.0)

Results on Test set

| Model | Device | Dataset Size | Finetune Rouge | OpenAI Rouge |
|---|---|---|---|---|
| small | Ascend | 11490 | 24.07 | 16.8 |
| medium | Ascend | 11490 | 25.94 | 20.6 |
| large | Ascend | 11490 | 26.81 | 21.5 |

Comparison to OpenAI’s GPT2 (TensorFlow)

| Model | Rouge-1 | Rouge-2 | Rouge-L | Rouge-AVG |
|---|---|---|---|---|
| OpenAI GPT2 XLarge | 29.34 | 8.27 | 26.58 | 21.40 |
| MindSpore GPT2 small | 30.51 | 11.37 | 30.32 | 24.07 |
| MindSpore GPT2 medium | 32.84 | 12.82 | 32.14 | 25.94 |
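The Rouge-AVG column appears to be the arithmetic mean of Rouge-1, Rouge-2, and Rouge-L. The sketch below recomputes it from the rounded values in the table under that assumption; the function name rouge_avg is ours and is not taken from the repo.

```python
def rouge_avg(rouge_1, rouge_2, rouge_l):
    """Arithmetic mean of the three ROUGE scores (assumed definition of Rouge-AVG)."""
    return (rouge_1 + rouge_2 + rouge_l) / 3


# Scores copied from the comparison table above.
scores = {
    "OpenAI GPT2 XLarge": (29.34, 8.27, 26.58),
    "MindSpore GPT2 small": (30.51, 11.37, 30.32),
    "MindSpore GPT2 medium": (32.84, 12.82, 32.14),
}

for model, (r1, r2, rl) in scores.items():
    print(f"{model}: {rouge_avg(r1, r2, rl):.2f}")
```

Recomputing from the two-decimal table entries agrees with the reported averages to within 0.01; any small difference would be consistent with the table having averaged unrounded scores.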

Project link: https://github.com/viewsetting/MindSpore-GPT2